How the Corrosion of Trust Fuels Conspiracy Theories

In early May, several weeks after the Covid-19 crisis had spread beyond China and its neighbours, a 25-minute video entitled "Plandemic" went viral online. It was viewed 8 million times in seven days on YouTube alone.

Widely shared on Facebook, YouTube and Vimeo before major platforms banned it, it insinuated that the Covid-19 pandemic was created by Bill Gates, the World Health Organisation (WHO), and Big Pharma to promote “genocidal” vaccination programmes designed to depopulate the planet.

Meanwhile, in the US, another story gained ground: a theory labelled “Mole Children,” which holds that the pandemic is a hoax designed to distract from a clampdown on members of the so-called “Deep State” (which includes Hillary Clinton), intended to free children they are holding as sex slaves.

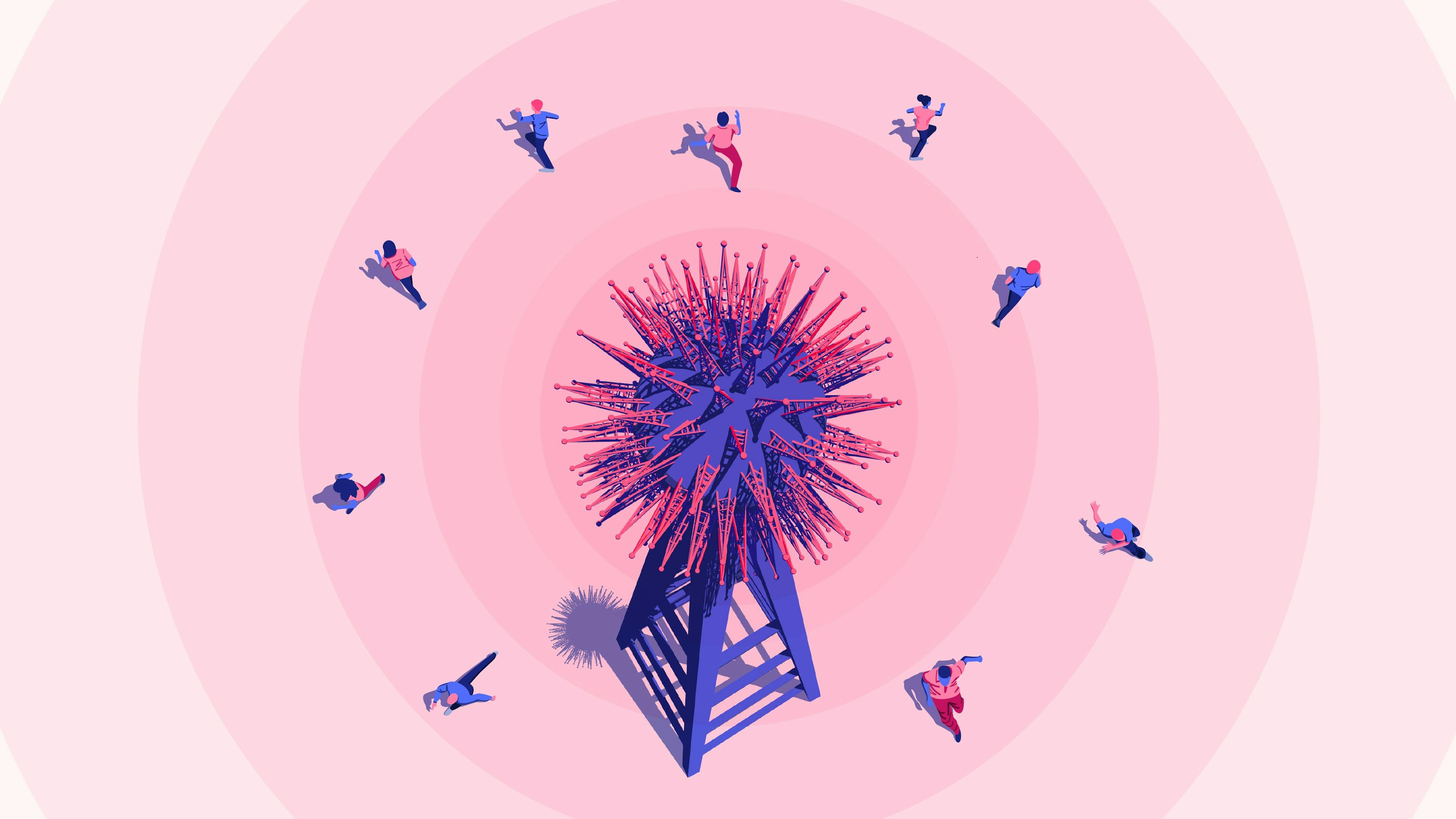

And in the UK, the 5G conspiracy theory (itself a strange, chaotic combination of several widely disputed theories) was among the most common forms of online misinformation in April 2020.

Illustration: a 5G tower from which people, afraid of contracting Covid-19, seek to escape.

Conspiracy theories are on the rise, boosted by the spread of fake news and unravelling global crises.

A study of online conversations by Yonder showed that the time it takes for fringe narratives to go mainstream fell from 6-8 months to 14 days during the current Covid-19 pandemic. The rapid increase in our online presence, combined with homeschooling, restrictions on movement and social distancing, creates ideal conditions for conspiracy theories to thrive.

This is equally true for those of us who now spend more time online due to remote working and for core workers who, whilst working on the front lines, must restrict social interactions to the digital sphere.

And conspiracy theories are more widely held than many are willing to believe. A recent poll by The Atlantic found that just 9% of respondents did not agree with any of the 22 conspiracy theories related to Covid-19.

The prevalence of disinformation related to the virus may be a result of how the pandemic has mobilised two prominent strands of online conspiracy theories, political and scientific, which represent 52% and 13%, respectively, of conspiratorial YouTube recommendations (pdf).

But what makes these theories so seductive? One popular explanation of why we believe false information refers to so-called "motivated reasoning," the theory that we are more likely to believe statements that align with our beliefs.

Many already distrust the government and believe that large technology corporations are up to no good. The leap from there, to Bill Gates being "behind" the pandemic, is not large. And while some of us may be more vulnerable to conspiratorial content, digital technologies and environments create new opportunities for conspiracy theories to spread and have a significant impact on our digital lives.

Illustration: A person takes a smartphone photo of the Venetian canals. Dolphins appear in the image on the phone, but are not there in reality.

TECHNOLOGY TRANSFORMS TRUST IN INFORMATION

At their core, conspiracy theories are stories about power and, as such, tend to rely on a distinction between the people (us) and a corrupt and secretive elite (them). Conspiracy theorists want us to take action or, at the very least, to mobilise others to spread the “truth.”

Central to these sets of beliefs is the conviction that mainstream institutions, including institutional knowledge, have been compromised and cannot be trusted. The prevalence of conspiracy theories online, and the speed at which they spread, therefore depend on two primary factors: the level of trust in information and the rate at which misinformation can spread.

What happens if we can no longer trust our eyes and ears? Most people are familiar with the phenomenon of fake news. It is now more common than just a few years ago to question online content.

Yet so many of us believed the story about the dolphins “returning” to Venice's canals, for example. The story went viral on Instagram and TikTok, and was even reported on national news channels. It took an intervention from National Geographic to debunk this, as well as many other Covid-19 animal stories.

Why was the dolphin story more powerful? One explanation is that we are more likely to believe something that “looks right.” In particular, photos and videos are easy to digest and tend to encourage much less scrutiny. It is natural to believe what our eyes can see. Yet digital technologies are helping create increasingly convincing fakes, a point illustrated by Google in 2019 when the company released 3,000 deep fake facial images.

The ease with which both information and misinformation can now be shared through technology certainly played a role in the rapid increase of this trend. YouTube is the primary tool for spreading conspiracy theories, with evidence that the platform’s algorithms continued to recommend conspiratorial content to viewers who previously watched similar videos (pdf).

Furthermore, dissemination of fake or misleading content through algorithms opens terrifying possibilities for online bots. According to Deeptrace Labs' 2019 State of Deep Fakes Report, bots are being created with identities stolen from social media, to make fake accounts or news items look more believable. Names, photos and other details are copied from real people and used to spread spam, amplify posts and spread disinformation.

While we may find this sinister, recommendation algorithms used by platforms do not significantly differ from human behaviour. An MIT study of 4.5 million tweets, "The Spread of True and False News Online," led by Soroush Vosoughi, Deb Roy, and Sinan Aral, and published in Science in 2018, found that Twitter users were 70% more likely to share falsehoods than facts.

The study examined the trajectories of 126,000 stories, tweeted by approximately 3 million users, and found that falsehood diffused significantly faster and more broadly than the truth. Political information suffered this effect more than stories about terrorism, natural disasters or other topics.

In addition, novel content and high-emotion stories—those that make us angry, amused, anxious or disgusted—are significantly more likely to be shared. In this sense, conspiracy theories rely on some of the same tactics as clickbait.

Illustration: A robot, posing as a human, uses a social network.

THE FUTURE OF TRUST

The future prevalence of online conspiracy theories will depend largely on the levels of trust in institutions and structures that hold power over information and enforcement. Two core dimensions impact the future of conspiracy theories, based on the changes in the structure of trust in the digital world.

STATE PRESENCE ONLINE

Crises have always provided abundant breeding grounds for conspiracy theories, but equally, they are often an opportunity for states and entities that act as extensions of the state to curb freedom of speech. This is because crises allow governments to suspend normal democratic processes and govern through a formal or informal state of emergency.

This propensity of crises to take us out of the framework of "normal" politics creates a threat of the state becoming more "interventionist" in the digital world. Perhaps this partly explains the sudden urgency with which social media platforms attempted to control misleading information on the novel coronavirus.

Much depends on how long the current “state of emergency” will last. The political aftermath of the pandemic is likely to be unevenly distributed not just economically, but also in terms of trust in public institutions. In some countries, the emergency will serve as an opportunity for the government to seize new powers. This is particularly true of countries with new or weak democracies, where the impact of such powers on the stability of institutions can be significant.

However, direct state intervention into our digital lives is not the only concern. Regimes find it easier to manipulate online content than to block it. For instance, Russia actively spread the 5G theory, and its state media recently publicised false claims that the Italian government had condemned Bill Gates for violating human rights by creating the Covid-19 crisis.

This claim was based on a video that was widely shared by 5G conspiracy theorists, which showed an Italian MP in Parliament making a speech condemning Bill Gates. However, contrary to how the video was portrayed, the speech did not represent the view of the government or indeed any party in Italy.

INDIVIDUAL ACCOUNTABILITY FOR TRUTH

As the technology to create and disseminate misleading and conspiratorial content evolves, digital market players and platforms will have to make increasingly difficult choices about freedom of speech. These choices are already being made, but the mandate is unclear. The processes also remain inefficient, since internet users currently function as both citizens of the digital world, and consumers.

For example, following the backlash against Donald Trump’s statements about using disinfectant and UV light against Covid-19, multiple Twitter users reported a genuine biotech company that had advertised its UV-based “Healight” on the platform, and Twitter disabled the company’s account.

In areas to which state intervention does not extend, the burden of effective fact-checking rests on the individual. But with the cost of verification of information rising as technologies that help create fake content become more advanced, the ability to verify content may itself become a commodity. Access to technologies that allow us to discern between genuine and fake content may become even more unequal.

This in itself would result in both an increase in conspiratorial content and increased inequality of opportunities online and offline.

THE CONSEQUENCES OF A DIGITAL WORLD WITHOUT TRUST

Trust is fundamental to social and economic relations. To trust someone or something is to be able to act in conditions of uncertainty. In this sense, it remains a crucial human characteristic in times of crisis.

Technology can be a source of trust, as was the case with the rise of platforms such as Uber, or Amazon, which connected buyers and sellers who might otherwise not have transacted. How many of us would get into a car with a stranger before Uber introduced a system where cars could be tracked and drivers reviewed?

Yet we have seen trust in government, mainstream media and financial institutions remain low over recent years, including during the current crisis. It is therefore not difficult to imagine a future where the digital world suffers from a systemic lack of trust.

But what are the technological and social consequences?

Promotional image for Aftermath VR: Euromaidan.

One obvious consequence is that technology will be employed to fight disinformation. Aftermath VR is a “documentary-game” that employs virtual reality to fight misinformation and conspiracy theories regarding the Euromaidan protests in Ukraine. Players can relive the events from the perspective of protesters in a high-fidelity reproduction. Such technology opens new horizons for what it may mean to teach, or indeed “create” history, in the future.

The effectiveness of Aftermath VR at fighting misinformation has not been formally studied, but the concept of using games to “vaccinate” players against fake news was tested by researchers at the University of Cambridge, who launched "Bad News." The online game allows users to play the role of a fake news tycoon, whose aim is to maximise Twitter followers. It was shown to have a moderate effect on players in building resistance to the tactics of fake news purveyors.

Initiatives like these may prove critical to fighting online misinformation in the future, since limiting and banning content has proven challenging. For instance, when YouTube started its crackdown on conspiratorial content, much of it seems to have simply moved to UgeTube—a new platform that does not censor content and, in our observation, has become a go-to place for conspiracy theories.

So much fake content, in a society with no trust in institutions, makes evidence impossible to believe. Take the case of the video of the Gabonese President’s New Year’s address. The recording was disputed as a deep fake, leading to speculation about the President’s death, which almost culminated in a coup. Yet he was very much alive.

As mentioned earlier, conspiracy theorists advance the belief that mainstream institutions, including institutional knowledge, are compromised and cannot be trusted. But a culture without trust amounts to the institutional order becoming compromised anyway.

Trust makes people feel safe and included. When we trust others, we do not fear them. But when trust is shattered, fear easily gets the upper hand.

The internet is a unique space: once online, we are always moving through someone else’s private property. Our data, and therefore our movements, are owned. We function here whilst trusting, perhaps wrongly, the benevolence of the proprietors of those spaces. An ongoing decrease in institutional trust will likely lead to stronger relationships between the state and major technology players, accelerating the monopolisation of the digital world.

What does that mean for us? Maybe that online participation will increasingly rely on hybrid forms of engagement. In the same way biometric tokens move our fingerprints and retina scans into the digital sphere, other parts of the real world may use advanced verification technologies to encourage trust, and enable the creation of “safe zones.”

A more connected future also means a future where more of our lives take place in privatised space, governed by the rules of governments and large technology companies. Such a world will surely be fertile ground for conspiracy theories to thrive.

Illustration: people using media within a "safe zone": a cordoned-off space shaped like an eye.

20 Oct 2020

Michal Rozynek

Illustrations by Debarpan Das
