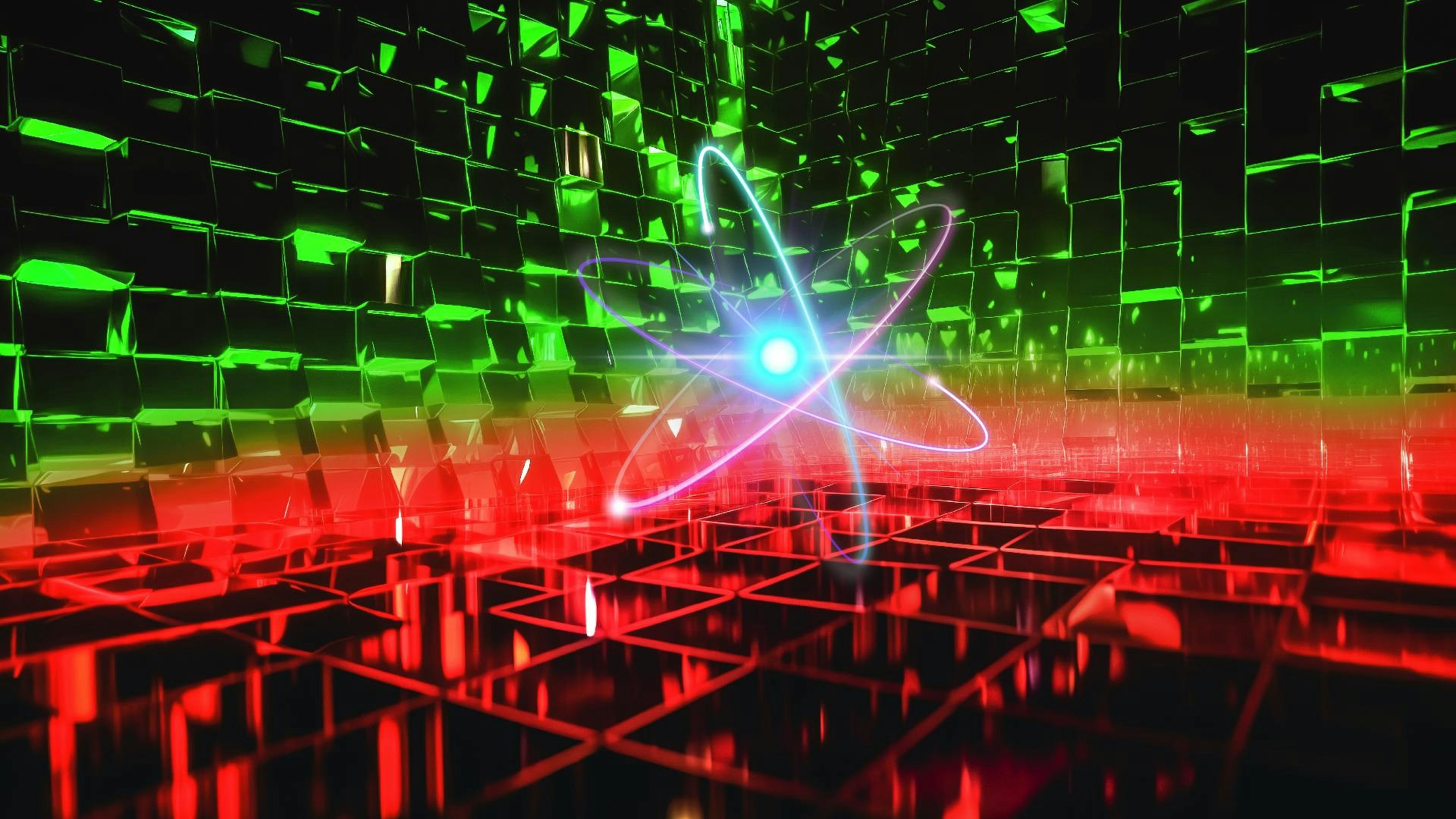

The quantum conundrum: Challenges to getting quantum computing on deck

Quantum computing uses the laws of quantum mechanics to solve problems too complex for classical computers. It could revolutionise fields like cryptography, materials science and drug discovery, to name a few. This future is not just theoretical; it’s a tangible reality, easier to engage with than many think.

IBM offers cloud quantum computing and a developer kit for quantum application development. SpinQ is producing a small-scale quantum computer for research purposes for as little as $5000. These tools make quantum computing accessible to a small but growing audience, allowing developers to write code and run programs on quantum machines without spending tens of millions of dollars for hardware.

Yet despite significant progress, quantum computing is still in its infancy. This article sheds light on the challenges preventing it from becoming part of daily life, and what innovations are pushing the technology forward.

Challenges of quantum computing

Quantum computing has great potential, but its unique challenges hinder mainstream adoption. These mainly revolve around the inherent properties of quantum mechanics, and the practical difficulties of translating them into a computational context.

The three main challenges we'll look at are quantum decoherence, error correction, and scalability. Each is a major hurdle on the road to quantum computing, and must be overcome if the technology is to reach its full potential.

Challenge 1: Quantum Decoherence

Quantum decoherence is a fundamental challenge in quantum computing. It refers to the loss of quantum behaviour when a system interacts with its environment, which causes a quantum state to transition into a classical state. This is a significant obstacle because the time before decoherence occurs, known as the coherence time, limits how long quantum information can be processed and stored.

The basic unit of information in quantum computing is the quantum bit, or qubit. A qubit can exist in a superposition of states, unlike a classical bit, which can only be in one of two states (0 or 1). Superposition allows quantum computers to perform certain complex calculations faster than classical computers.
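
To make superposition concrete, here is a minimal sketch in plain Python. It assumes the standard textbook picture of a qubit as two complex amplitudes; the function names are illustrative and do not come from any quantum SDK.

```python
import math
import random

def measurement_probabilities(state):
    """Born rule: the chance of reading 0 or 1 is the squared magnitude of each amplitude."""
    alpha, beta = state
    return abs(alpha) ** 2, abs(beta) ** 2

def hadamard(state):
    """Apply the Hadamard gate, which turns |0> into an equal superposition of |0> and |1>."""
    alpha, beta = state
    s = 1 / math.sqrt(2)
    return (s * (alpha + beta), s * (alpha - beta))

def measure(state, rng=random):
    """Simulate one measurement: returns 0 or 1 with the Born-rule probabilities."""
    p0, _ = measurement_probabilities(state)
    return 0 if rng.random() < p0 else 1

zero = (1 + 0j, 0 + 0j)   # behaves like a classical 0
plus = hadamard(zero)     # superposition (|0> + |1>) / sqrt(2)

p0, p1 = measurement_probabilities(plus)
print(f"P(0) = {p0:.2f}, P(1) = {p1:.2f}")
print("ten simulated measurements:", [measure(plus) for _ in range(10)])
```

A classical bit would always give the same reading; the superposed qubit gives 0 or 1 at random, each about half the time.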

Maintaining coherence is like balancing a pencil on its tip. Ideally, with no wind or vibrations, the perfectly balanced pencil would stay upright. This is like a qubit in a quantum state. In the real world, though, any gust of wind or tiny vibration can cause the pencil to fall. That describes quantum decoherence, where minuscule environmental disturbances can cause a qubit to lose its delicate quantum state, like the pencil falling.

Decoherence is problematic because it leads to quantum computation errors. Since the coherence time of a qubit is relatively short, quantum computations must be completed within this timeframe before decoherence occurs.
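
As a rough illustration of that time budget, the sketch below counts how many sequential operations fit inside one coherence time and applies a simple exponential-decay model. The figures (a 100 microsecond coherence time, 50 nanosecond gates) are hypothetical and chosen only for the arithmetic; real devices vary widely.

```python
import math

def max_gate_count(coherence_time_ns, gate_time_ns):
    """Number of sequential gates that fit inside one coherence time."""
    return coherence_time_ns // gate_time_ns

def remaining_coherence(elapsed_ns, coherence_time_ns):
    """Simplified model: coherence decays exponentially with elapsed time."""
    return math.exp(-elapsed_ns / coherence_time_ns)

# Hypothetical figures, for illustration only
COHERENCE_TIME_NS = 100_000   # 100 microseconds
GATE_TIME_NS = 50             # one gate operation

print("gates per coherence time:", max_gate_count(COHERENCE_TIME_NS, GATE_TIME_NS))
for gates in (100, 1_000, 2_000):
    left = remaining_coherence(gates * GATE_TIME_NS, COHERENCE_TIME_NS)
    print(f"after {gates} gates: {left:.1%} of coherence remains")
```

Under this model, a longer program does not simply take longer to run; it becomes steadily less likely to finish with its quantum state intact.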

Increasing qubit coherence time is a significant area of research, but it's only one part of overcoming quantum decoherence. Studies exploring superconducting qubits could lead to quantum technologies that better control and improve quantum coherence. Researchers are also considering different materials and designs for qubits with longer coherence times. Topological qubits, for example, are predicted to have longer coherence times due to their unique properties.

One increasingly popular approach is the development of error correction codes, which aim to detect and correct errors caused by decoherence before they can affect computation.

Error correction itself, however, poses a challenge.

Challenge 2: Quantum Error Correction

Quantum error correction (QEC) is a vital component of the development of quantum computing. As you've seen, quantum states are inherently fragile, but implementing QEC presents its own issues.

First, error detection and correction in quantum systems must obey the quantum no-cloning theorem, which states that it's impossible to create an identical copy of an arbitrary unknown quantum state. This rule contrasts with classical error correction, where information can be duplicated and checked for errors.
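
A small simulation can show what the theorem rules out. The sketch below uses a CNOT gate as a candidate "copier", with two-qubit states written as lists of four amplitudes. The helper names are illustrative, and this is a demonstration of one failed copier, not a proof of the theorem.

```python
import math

def kron(a, b):
    """Tensor product of two single-qubit states: amplitudes for |00>, |01>, |10>, |11>."""
    return [x * y for x in a for y in b]

def cnot(state):
    """CNOT with the first qubit as control: swaps the |10> and |11> amplitudes."""
    a00, a01, a10, a11 = state
    return [a00, a01, a11, a10]

def close(u, v, tol=1e-12):
    """True if two states have the same amplitudes within a small tolerance."""
    return all(abs(x - y) < tol for x, y in zip(u, v))

s = 1 / math.sqrt(2)
zero, one, plus = [1, 0], [0, 1], [s, s]

# Basis states are copied correctly into the blank second qubit.
print(close(cnot(kron(zero, zero)), kron(zero, zero)))
print(close(cnot(kron(one, zero)), kron(one, one)))

# A superposition is not copied: the result is an entangled state,
# not two independent copies of |+>.
attempt = cnot(kron(plus, zero))
ideal = kron(plus, plus)
print(close(attempt, ideal))
```

The gate duplicates 0 and 1 faithfully, which is all a classical copier needs, but fails on the superposition. This is why quantum codes cannot simply store backup copies of a qubit.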

Second, quantum errors can occur in more ways than classical bit errors due to the nature of qubits. A qubit error could be a bit flip, a phase flip, or both at once, which requires more complex error correction codes.
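
The two kinds of flip can be sketched on a single qubit written as a pair of amplitudes. This is a simplified illustration with hypothetical function names; the operations correspond to the standard Pauli X and Z errors.

```python
import math

def bit_flip(state):
    """Pauli X: swaps the |0> and |1> amplitudes, like a classical bit error."""
    alpha, beta = state
    return (beta, alpha)

def phase_flip(state):
    """Pauli Z: flips the sign of the |1> amplitude; there is no classical analogue."""
    alpha, beta = state
    return (alpha, -beta)

def bit_and_phase_flip(state):
    """Bit flip followed by phase flip (Pauli Y, up to a global phase)."""
    return phase_flip(bit_flip(state))

s = 1 / math.sqrt(2)
zero = (1.0, 0.0)    # |0>
plus = (s, s)        # (|0> + |1>) / sqrt(2)
minus = (s, -s)      # (|0> - |1>) / sqrt(2)

print(bit_flip(zero))               # |0> becomes |1>
print(phase_flip(plus) == minus)    # |+> becomes |->
print(bit_and_phase_flip(zero))     # both errors at once
```

A code that only checks for flipped bits would miss the phase flip entirely, which is why quantum codes have to guard against both.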

Still, progress is evident in this realm. The first quantum error correction codes, such as the Shor code (published in 1995) and the Steane code (1996), were designed to correct arbitrary errors in a single qubit. Unfortunately, these codes required many physical qubits (nine and seven, respectively) to encode a single logical qubit, making them inefficient for practical use.
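
The Shor code is built from three-qubit repetition codes, and the simplest of these can be sketched in a few lines. The example below is a deliberately reduced model: it handles only a single bit-flip error, tracks the encoded state as a dictionary from bit patterns to amplitudes, and uses illustrative function names. It is not an implementation of the full Shor or Steane code.

```python
def encode(alpha, beta):
    """Encode alpha|0> + beta|1> as alpha|000> + beta|111>."""
    return {(0, 0, 0): alpha, (1, 1, 1): beta}

def apply_bit_flip(state, qubit):
    """Flip one physical qubit in every branch of the state."""
    flipped = {}
    for bits, amplitude in state.items():
        new_bits = list(bits)
        new_bits[qubit] ^= 1
        flipped[tuple(new_bits)] = amplitude
    return flipped

def syndrome(state):
    """Parity checks q0^q1 and q1^q2. Both branches give the same answer,
    so the checks locate the error without revealing alpha or beta."""
    results = {(b[0] ^ b[1], b[1] ^ b[2]) for b in state}
    assert len(results) == 1, "more than a single bit-flip error occurred"
    return results.pop()

# Which physical qubit each syndrome points to (None means no error).
SYNDROME_TO_QUBIT = {(0, 0): None, (1, 0): 0, (1, 1): 1, (0, 1): 2}

def correct(state):
    """Undo a single bit flip located by the syndrome."""
    qubit = SYNDROME_TO_QUBIT[syndrome(state)]
    return state if qubit is None else apply_bit_flip(state, qubit)

logical = encode(0.6, 0.8)
damaged = apply_bit_flip(logical, 1)
print("syndrome:", syndrome(damaged))
print("recovered:", correct(damaged) == logical)
```

Even this toy code spends three physical qubits to protect one logical qubit against one type of error, which hints at why overhead is a central concern.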

More recent developments focus on topological quantum error correction codes. These codes take advantage of the properties of qubits arranged in specific patterns, allowing for more efficient error correction with fewer physical qubits.

The surface code has gained popularity in recent years due to its high error threshold and simple implementation. Several experimental groups, including Google, have demonstrated error detection using the surface code, and ongoing work aims to improve the reliability and scalability of these implementations. Still, achieving fault-tolerant quantum computation, where quantum computations can be performed reliably despite errors, remains a significant challenge.

Challenge 3: Scalability

As the number of qubits in a quantum computer increases, so does its computational power. But scaling quantum computers isn't as straightforward as adding more transistors to a classical computer chip.

Ideally, every qubit in a quantum computer can interact with every other qubit, which maximises computational power. This becomes increasingly difficult to achieve as the number of qubits increases, and the likelihood of errors rises too. Errors can be introduced by anything from environmental noise to imperfections in the qubits themselves.
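
Some quick arithmetic shows why both problems grow with size. The sketch below counts the qubit pairs that all-to-all interaction would need, and estimates the chance that at least one qubit suffers an error, assuming independent errors. The per-qubit error probability is a hypothetical figure chosen for illustration.

```python
def pairwise_couplings(n_qubits):
    """Number of distinct qubit pairs if every qubit interacts with every other."""
    return n_qubits * (n_qubits - 1) // 2

def prob_at_least_one_error(n_qubits, error_prob):
    """Chance that at least one qubit fails, assuming independent errors."""
    return 1 - (1 - error_prob) ** n_qubits

ERROR_PROB = 0.001  # hypothetical per-qubit error probability per step

for n in (10, 100, 1_000):
    pairs = pairwise_couplings(n)
    risk = prob_at_least_one_error(n, ERROR_PROB)
    print(f"{n:>5} qubits: {pairs:>7} pairs, P(at least one error) = {risk:.1%}")
```

The number of pairs grows roughly with the square of the qubit count, while the chance of an error-free step shrinks with every qubit added.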

Scalability is a challenge of particular interest for software companies, which obviously have a vested long-term interest in the development of larger, more reliable quantum computers. IBM's Quantum System One is designed to maintain qubit quality even as the system scales.

Research into new types of qubits could lead to quantum computers that are more resistant to errors, making them easier to scale in the first place. Microsoft's Quantum Lab is working on a topological quantum computer that uses anyons, theoretical quasiparticles that exist only in two dimensions. Microsoft created a super thin system, just 120 nanometers thick (nearly 1/700 the diameter of a human hair!), that arguably passes the two-dimensionality test.

The future of quantum computing

There is a growing focus on developing quantum algorithms and software that leverage the unique capabilities of quantum hardware. This includes creating quantum algorithms for solving complex computational problems, and quantum software platforms that can facilitate the development and deployment of those algorithms.

There remains a lot to do. But as our understanding of quantum mechanics deepens and technology evolves, quantum computing's revolutionary potential becomes increasingly apparent. Its challenges are significant, but not insurmountable.

Summing up:

- In the realm of quantum decoherence, advancements in quantum hardware and the use of different materials and designs for qubits show promise. Research into superconducting qubits and topological qubits could lead to quantum technologies that better control and improve quantum coherence.

- Quantum error correction codes have made significant strides in tackling the errors that fragile quantum states produce. The use of topological quantum error correction codes, like the surface code, could yield more efficient error correction with fewer physical qubits. The United States dominates in quantum error correction, both in patent ownership and academic publications. However, interest in the topic is global. China has made a strong showing in both patents and academic publications, indicating a focused national effort in this field.

- On the scalability front, advancements in quantum hardware are paving the way for larger, more reliable quantum computers. IBM has made significant advances in developing quantum processors with increased qubit counts and improved qubit quality.

Researchers and developers worldwide have shown themselves to be unflagging in tackling quantum technology's various conundrums. In fact, the number of patents held by tech companies is striking: Google, Microsoft, and IBM, all US-based, hold a significant share, indicating the sector's robust interest in quantum computing and specifically quantum error correction.

No doubt quantum computing’s disruptive potential will impact daily life, even before most people get to see the hardware.

Data on publications and patents courtesy of The Lens.

21 Sep 2023

Giorgio Tarraf

Illustration by Thomas Travert.

L’Atelier is a data intelligence company based in Paris.

We use advanced machine learning and generative AI to identify emerging technologies and analyse their impact on countries, companies, and capital.