Crime, punishment, and cyberspace

“The murders were not actual murders, but rather a form of teleportation: head in hands, pockets empty, and any object in hand at the time dropped on the ground at the scene of the crime.”

The quote above comes from a paper presented at The First Annual International Conference on Cyberspace in 1990. Its authors were the creators of Habitat, a first attempt in 1987 to support thousands of live avatars in a shared cyberspace through Quantum Link, an AOL precursor. Habitat users operated a full economy: They ran businesses, started newspapers, fell in love, owned homes, founded religions, and waged wars. With 20,000 adjoining “regions,” its design encouraged democracy.

Until they began to steal, shoot, and stalk … a virtual repetition of Adam and Eve, Romulus and Remus, Cain and Abel.

Guns and other weapons arrived. Avatars were decapitated in their homes. A player who was a Greek Orthodox priest in real life founded a church in-game, whose disciples were forbidden from carrying weapons or promoting violent activity. Eventually, a town hall election was held, and the first Habitat sheriff served until the end of the game’s pilot.

“The more people we involved in something, the less in control we were,” wrote co-creator Chip Morningstar in the paper. “We could influence things, we could set up interesting situations, we could provide opportunities for things to happen, but we could not dictate the outcome. Social engineering is, at best, an inexact science…”

On a Monday night in 1993, LambdaMOO, a text-based virtual world governed by members, found itself in a cyberspace conundrum after a user leveraged a “voodoo doll” subprogram to defile unsuspecting members with heinous sex acts in virtual public space. Journalist Julian Dibbell depicted the events in a 1993 Village Voice article, “A Rape in Cyberspace,” introducing terms like platonic-binary or mind-body split—ways of conveying how virtual world characters become divisible extensions of self.

Dibbell chronicled the aftermath. LambdaMOO members worked through a collective trauma that led to changes in the world’s design. Before the incident, creator Pavel Curtis had released a “New Direction” document stating that the world’s engineers were technicians who would merely implement community decisions; it closed with an arbitration system through which users could file suit against one another. But all this merely emphasised the absence of formal definitions, including rules against sexual violence.

Successor worlds have been no more peaceful. In 2007, German authorities investigated simulated child molestation and child pornography distribution in Second Life. One player sold child pornography and paid for sex with underage players or those posing as minors. An ex-employee claimed the company had significant security flaws and turned a blind eye to simulated sex acts involving children. Reports of fraud and money laundering also surfaced.

In 2016, the first investigative article on VR-based sexual harassment appeared; in 2021, we learned groping was an issue in would-be metaverses.

With an expected €1.6 trillion boost to the global economy by 2035 (according to 2020 PwC data), and 25 percent of people expected to spend at least an hour a day in a metaverse, the question of who or what will be responsible for law and order lingers.

For three decades, cyberspace governance has functioned like a game of roulette, alternating seemingly at random between traditional real-world law enforcement, developers, and self-organised groups of volunteer users. As the metaverse continues to evolve, understanding who rules, and how, grows more pressing.

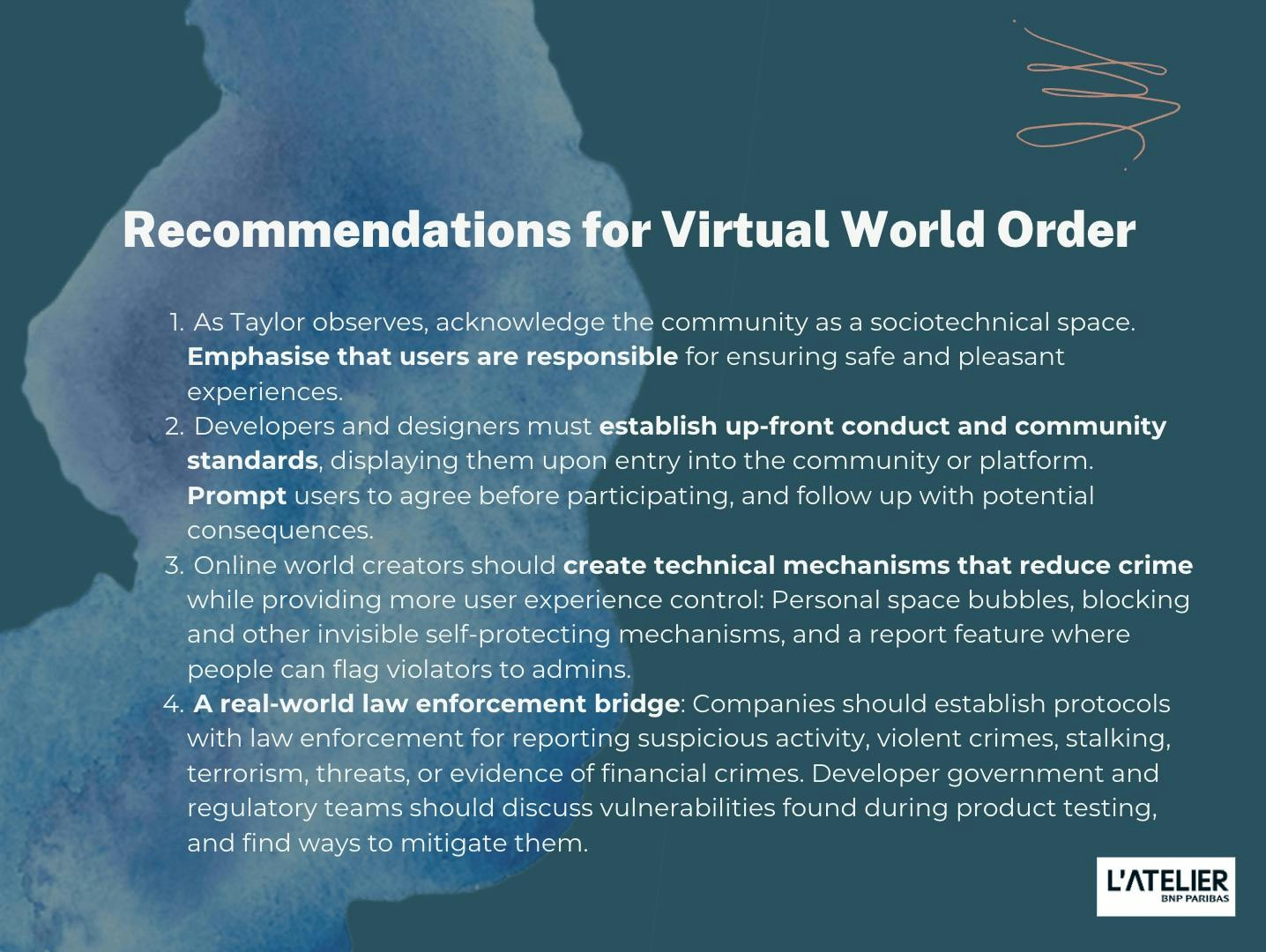

T.L. Taylor, professor of Comparative Media Studies at MIT and director of the MIT Game Lab, emphasises sociotechnical approaches to online communities. Drawing on more than 30 years of sociological data, Taylor says developers should understand virtual worlds as “embodied spaces.” Too often, developers encourage user participation in online communities without considering cultural production and co-creation.

The Proteus effect suggests that influence flows both ways: We alter and shape our digital self-representations, and our avatars’ characteristics and experiences, in turn, shift how we behave in real life.

“Culture is dynamic, interactive, and adaptive. Getting developers attuned to the quick cultural production and having a framework that understands the world as a dynamic living social system is crucial,” says Taylor.

“Savvy developers understand the world will change and iterate based on its users, and they put processes like basic guardrails into place, attending to its emergence. In gaming, the smartest developers realise games are social platforms where users construct their preferred experiences.”

Online worlds require three layers: Technical infrastructure for catching bad actors, governance that defines crime or unfavourable behaviour, and community management. Taylor says that even if a system doesn’t give users tools, they are naturally inclined to manage themselves, from formal policy to informal norms like dispute resolution.

“In the early MMORPG, EverQuest, top guilds used third-party websites to set up raiding calendars negotiated across competing guilds for a dragon they desired to kill,” recalled Taylor. “Since the game offered no method for the guilds to navigate scarce resources, they found a way to organise using third-party sites.”

What is the rule of law?

The challenge every worldmaker contends with is stopping online crime, or at least containing it. But who is responsible for cyberspace law and order, and how is it defined?

In basic terms, the rule of law has two functions: To deter malevolence and to correct human behaviour. It is both proactive and reactive, establishing ground rules and consequences, though in physical space it’s largely reactive. Crime is an action against established law that harms public welfare or morals, but what happens in a LambdaMOO situation, where no defined rules or morals exist?

In his 1689 Second Treatise of Government, philosopher John Locke advanced the idea of a social contract, in which the governed give up some freedom in exchange for a government that protects certain “natural rights.” In this model, a government’s power stems from the consent of the governed, and the framework still shapes how we understand the rule of law.

Cyberspace has varied definitions of a “social contract,” and three possible types of arbiter: Developers, real-world law enforcement, and users. Whoever the arbiter, users must be willing to surrender some natural rights or control for governance to work. Which entity should receive those surrendered rights is unclear.

In his 1999 book, Code and Other Laws of Cyberspace, Harvard Law School professor Lawrence Lessig argued that in cyberspace, code functions as law, making software designers the arbiters of what is allowed to exist in virtual spaces. If a platform has paying subscribers, it’s in its interest to police bad behaviour: Users who are harassed, attacked, or threatened will likely leave, increasing churn. Developers could theoretically code crime out of these worlds, but this would require a kind of omnipresence on their part.

Developers can’t stop everything, but they can program social conventions, such as blocking options and personal space bubbles like those found in VRChat. Tech companies can also hire diverse developer teams and product managers who understand systemic and social vulnerabilities. Still, no one can code out every possible harm. And would developers even want to be the final arbiters?
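Lessig’s idea that code functions as law is easiest to see in a tool like the personal space bubble. The Python sketch below shows, under stated assumptions, how a client might decide which avatars to render for one viewer: Blocked users are never drawn, and anyone who steps inside the viewer’s bubble is hidden from that viewer. The names `Avatar`, `SafetySettings`, and `visible_avatars`, and the 1.2-metre default radius, are hypothetical and do not describe VRChat’s or any other platform’s actual implementation.

```python
from __future__ import annotations

import math
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Avatar:
    user_id: str
    position: tuple[float, float, float]  # x, y, z; metres is an assumed unit


@dataclass
class SafetySettings:
    bubble_radius: float = 1.2  # hypothetical default, not a real platform value
    blocked: set[str] = field(default_factory=set)


def visible_avatars(viewer: Avatar, others: list[Avatar], settings: SafetySettings) -> list[Avatar]:
    """Return the avatars this viewer's client should render.

    The rule is applied per viewer: an avatar hidden here may still be
    visible to everyone else in the room.
    """
    shown = []
    for other in others:
        # Never render yourself or anyone you have blocked.
        if other.user_id == viewer.user_id or other.user_id in settings.blocked:
            continue
        # Hide anyone standing inside the viewer's personal space bubble.
        if math.dist(viewer.position, other.position) < settings.bubble_radius:
            continue
        shown.append(other)
    return shown


me = Avatar("alice", (0.0, 0.0, 0.0))
crowd = [
    Avatar("bob", (0.5, 0.0, 0.0)),    # inside the bubble
    Avatar("carol", (3.0, 0.0, 0.0)),  # outside the bubble
    Avatar("dave", (5.0, 0.0, 0.0)),   # outside, but blocked
]
print([a.user_id for a in visible_avatars(me, crowd, SafetySettings(blocked={"dave"}))])  # ['carol']
```

Hiding intruders is only one design choice. A platform could instead refuse to let avatars move inside the boundary at all, which changes what the world permits rather than merely what one viewer sees.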

In 2001, Britannia, the world of the popular fantasy MMORPG Ultima Online, suffered a wave of extinctions and increased crime following a period of “self-policing.” A player-killing problem prompted the developers, acting as arbiters, to colour killers’ names red to alert new players and the community (similar to Megan’s Law, a US federal law that makes registered sex offender information public). The killers attacked each other to restore their reputations, so the developers eventually made killing impossible except in certain zones.

In 2003, Second Life became the first world governed by Community Standards, set out in Terms of Service that all users must accept. These include punitive measures: Temporary suspensions, restricted access, or account termination for repeat offenders, who often migrate to other worlds and continue offending. Some developers hand punitive decisions to users through tools such as “flag this content as abusive” buttons or High Fidelity’s personal space bubbles.
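Graduated punishments of this kind can be modelled as a simple escalation ladder. The hypothetical Python sketch below makes two assumptions: The ladder follows the order in which the sanctions are listed above (temporary suspension, then restricted access, then termination), and each world keeps its own offence records. The second assumption is what lets an offender banned in one world start over in another. `Sanction`, `WorldModeration`, and `record_offence` are illustrative names, not any platform’s API.

```python
from __future__ import annotations

from collections import defaultdict
from enum import Enum


class Sanction(Enum):
    # Escalation order is an assumption taken from the order listed in the text.
    TEMPORARY_SUSPENSION = 1
    RESTRICTED_ACCESS = 2
    ACCOUNT_TERMINATION = 3


LADDER = list(Sanction)  # enum members in definition order


class WorldModeration:
    """Offence records held by a single world; nothing is shared with other worlds."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.offences: defaultdict[str, int] = defaultdict(int)

    def record_offence(self, user_id: str) -> Sanction:
        """Log a confirmed offence and return the sanction it triggers."""
        prior = self.offences[user_id]
        self.offences[user_id] = prior + 1
        # Repeat offenders past the last rung stay at termination.
        return LADDER[min(prior, len(LADDER) - 1)]


world_a = WorldModeration("world-a")  # hypothetical world names
world_b = WorldModeration("world-b")
for _ in range(3):
    print(world_a.record_offence("mallory").name)
# After migrating, the same user starts with a clean record:
print(world_b.record_offence("mallory").name)
```

A shared, cross-world record would close that gap, but it immediately raises the question of who would be trusted to keep it.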

This approach has become common, though it's hardly a deterrent to bad behaviour. What’s more, such options are inconsistent across worlds.

What follows are our current recommendations for virtual world order. Consider them a draft.

Technical standards and new statehood

The metaverse is currently a hypothetical iteration of the internet, where users can have blended, extended, or mixed-reality experiences in different worlds. Moving seamlessly between them, with the same avatar or items (as in the 2018 Spielberg film Ready Player One), remains science fiction; interoperability requires certain platform-agnostic standards.

In 2022, Meta, Microsoft, the World Wide Web Consortium (W3C), and chipmakers met at the Metaverse Standards Forum to discuss interoperability standards similar to those the W3C maintains for the web. Progress has been made in this regard: The open WebXR standard lets VR and AR experiences run in browsers across devices, and blockchain offers opportunities to register user information, including a virtual metadata “ID,” for avatars and other possessions such as skins.

Once interoperability arrives, governance concepts will have to evolve too, potentially including API standards that let law enforcement connect to various platforms.

In Europe, centuries in which city-states were the prevailing systems of law gave way to nation-states, and after the Second World War, collective national consciousness and nation-building became the foremost focus of governments. The metaverse could recast notions of statehood yet again.

Michal Rozynek suggests the “borders” of nations can easily be transplanted to online worlds, where feelings of national identity can be shaped and further mobilised. With this in mind, virtual worlds seeking better governance systems may transplant familiar border-guarding methods from physical space, such as passports and border controls that exclude certain groups, further decentralising the metaverse.

Perhaps centralisation will be replaced by community vetting, as Web3 approaches suggest: Members of crypto launchpad communities already use tokenomics to vet each other and new cryptocurrency offerings. Snow Crash, a 1992 science fiction novel by Neal Stephenson, seems prescient here. The story takes place in 21st-century Los Angeles after a worldwide economic collapse, when most government functions have passed to private organisations and entrepreneurs, and mercenary armies and private security forces act as the government.

Real-world law enforcement

A widely cited 2001 paper by economist Edward Castronova examines governance in Norrath, the world of EverQuest. A government appears briefly at the start, to ensure equal asset distribution as new players enter a world with limited resources, then backs away, giving the virtual economy free rein. While this model favours limited government in online worlds, the last two decades have exposed its limits.

In 2007, one Second Life avatar allegedly raped another. Bloggers dismissed the simulated attack as cyberspace play, but police in Belgium opened an investigation against the perpetrator. In 2021, user Nina Patel described being “virtually gang raped” within 60 seconds of joining Meta’s Horizon Venues. Prosecuting rape currently requires physical contact in the real world, and an avatar is virtual, even though research repeatedly demonstrates that violent virtual acts affect our peripheral and central nervous systems.

What role does traditional policing play when players are pushed into walls or nearby objects? Or consider more extreme cases: A virtual ISIS caliphate using online spaces for recruitment, or a virtual concentration camp built in a space dedicated to children, which happened in Roblox. Without metaverse standards, new states can also operate independently, with little real government oversight.

Nested deep in secure servers at Interpol is a virtual reality environment where officers explore different metaverses to better anticipate and understand possible crimes. News of this programme followed reports about how easy it is for children to access virtual strip clubs; in one case, a researcher posed as a 13-year-old girl and experienced grooming, sexual material, racist insults, and a rape threat.

With 195 member states, Interpol sees itself as a policing leader. Secretary General Jürgen Stock told BBC News in early 2023 that the agency wants to better understand how to police crime in Meta Horizon Worlds, since officials find it difficult to apply existing definitions of physical crime to non-physical space.

According to a Europol report, Denmark, Norway, and Sweden collaborated to create a working group on online policing so that European agencies could share experiences and tools. In 2015, Norway started “Nettpatrulje,” or “internet patrols,” present on social media, gaming, and streaming platforms to “share information, receive tips, and carry out police work online.” Every police district has an online patrol, and the @politivest account had over 800,000 TikTok followers as of this writing. During COVID-19 lockdowns, French children’s aid group L’Enfant Bleu launched a futuristic blue-winged avatar in the game Fortnite to encourage children suffering abuse to share their stories.

Scott Jacques, professor of criminology and criminal justice at Georgia State University, started the now-paused Metaverse Society of Criminology, meeting users of 2D online worlds as an avatar to educate them about the metaverse.

“We’re not near to handling stage one of metaverse crimes because we have not yet perfected stage one of internet crimes,” explained Jacques’ avatar. “Maybe when we get to a more ubiquitous metaverse world, we will.”

Jacques says that while users naturally need to create order, tech regulations will set the broader direction. “If the future of the metaverse is siloed in centralisation, then it will be like social media, then platforms will say, ‘Hey, we can't control this content.’ If it is decentralised, it would be much clearer that we would need a third party.”

How the current game of arbiter roulette evolves will depend on how effectively two strategies are executed: Deterrence, scaring people into good behaviour with threats such as account suspensions or bans; and proterrence, scaring people into policing others’ behaviour, as when governments threaten tech companies with sanctions to push them to self-police.

“Designers can get rid of opportunities, like getting rid of certain body parts on avatars to reduce incidents of groping, but tech companies are not generally accountable,” says Jacques. “We will have to scare the people in charge of the technology into stopping bad behaviour.”

Germany’s Network Enforcement Act (Netzwerkdurchsetzungsgesetz, or NetzDG) took full effect in 2018. It requires social media platforms with over two million registered users in Germany to remove “clearly illegal” content within 24 hours of receiving a complaint, and other illegal content generally within seven days, or face fines of up to €50 million. Deleted content must be stored as evidence for ten weeks, and platforms must submit transparency reports on their handling of complaints about illegal content every six months. NetzDG faced criticism from politicians, human rights groups, journalists, and academics for incentivising the censorship of lawful expression.
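The act’s deadlines reduce to simple date arithmetic. The Python sketch below is a simplified illustration rather than a compliance tool. It assumes every period runs from the moment a complaint is received and treats seven days as a hard limit, although the law allows exceptions. The function names `removal_deadline`, `is_overdue`, and `retain_until` are hypothetical.

```python
from datetime import datetime, timedelta, timezone

# Simplified NetzDG-style windows (assumption: all run from receipt of a complaint).
CLEARLY_ILLEGAL_WINDOW = timedelta(hours=24)
OTHER_ILLEGAL_WINDOW = timedelta(days=7)
EVIDENCE_RETENTION = timedelta(weeks=10)


def removal_deadline(received_at: datetime, clearly_illegal: bool) -> datetime:
    """Latest time by which reported content should be removed."""
    window = CLEARLY_ILLEGAL_WINDOW if clearly_illegal else OTHER_ILLEGAL_WINDOW
    return received_at + window


def is_overdue(received_at: datetime, clearly_illegal: bool, now: datetime) -> bool:
    """True if `now` is past the removal deadline for this complaint."""
    return now > removal_deadline(received_at, clearly_illegal)


def retain_until(removed_at: datetime) -> datetime:
    """Earliest time a stored copy of removed content could be discarded."""
    return removed_at + EVIDENCE_RETENTION


complaint = datetime(2024, 3, 7, 9, 0, tzinfo=timezone.utc)
print(removal_deadline(complaint, clearly_illegal=True).isoformat())   # 2024-03-08T09:00:00+00:00
print(removal_deadline(complaint, clearly_illegal=False).isoformat())  # 2024-03-14T09:00:00+00:00
print(retain_until(datetime(2024, 3, 8, 9, 0, tzinfo=timezone.utc)).date())  # 2024-05-17
```

The hard part in practice is not the arithmetic but the `clearly_illegal` flag: Someone has to classify each piece of content, and that judgement is what critics argue pushes platforms toward over-removal.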

In contrast, Section 230 of the US Communications Decency Act protects platform owners from liability for third-party content. Proponents see it as necessary for upholding First Amendment free speech rights. In 2023, the Supreme Court of the United States left protections for Google/YouTube and Twitter (X) intact in two cases, without ruling on the scope of Section 230 itself: Gonzalez v. Google LLC, where the plaintiffs alleged that YouTube’s recommendation algorithm radicalised ISIS recruits, and Twitter, Inc. v. Taamneh, where Twitter (X) allegedly aided and abetted an Istanbul nightclub attack by allowing ISIS to recruit and spread propaganda.

An amicus curiae brief filed with the Court by Reddit and some of its volunteer moderators stressed, in line with Section 230, that online platforms should have the flexibility to make content moderation decisions based on community standards and guidelines rather than following a one-size-fits-all approach. This makes a strong case for user arbiters.

The metaverse and Web3 call for increased self-reliance. User moderation is a hallowed internet practice that remains widespread, and the model often yields intricate, remarkable systems of governance or community hierarchies, where members are nominated to take on de facto law enforcement roles.

“Without developers or police attending to communities, people who want the best for their online lives step in to fill the gap. On Twitch, for example, users are trying to cultivate healthy channels. I always hope their groundbreaking work does not get lost. Their invisible labour maintains online spaces,” says Taylor.

In some ways, cyberspace citizen governance resembles Native American tribal orders where, for thousands of years, tribes self-governed through laws, cultural traditions, religious customs, kinship systems, and clans. Many operate democratically, distributing power amongst a chief and tribal council, and providing a community structure.

Policing all virtual worlds is increasingly impossible, due to different technical standards. Law enforcement cannot expect to be present, or effective, in the entire—and largely still dispersed—metaverse. For now, our cyberspace arbiter roulette continues.

But the metaverse will keep evolving. As it does, each arbiter group—developers, law enforcement, and community members—will require enhanced cooperation, and share certain standards, to cultivate responsive models of virtual safety that somehow remain porous enough to accommodate us all.

07 Mar 2024

-

Jessica Buchleitner

Illustration by Thomas Travert.

L’Atelier is a data intelligence company based in Paris.

We use advanced machine learning and generative AI to identify emerging technologies and analyse their impact on countries, companies, and capital.